I found that Google AdSense is not able to detect the content on my website. The main reason is that Google does not execute JS when crawling content, so I need SSR.

Please guide me on how to implement SSR in the answer project.

I found the reason for this problem. The default robots file prevents the API from being crawled. Search engines can't fill in the page if they can't get the data.

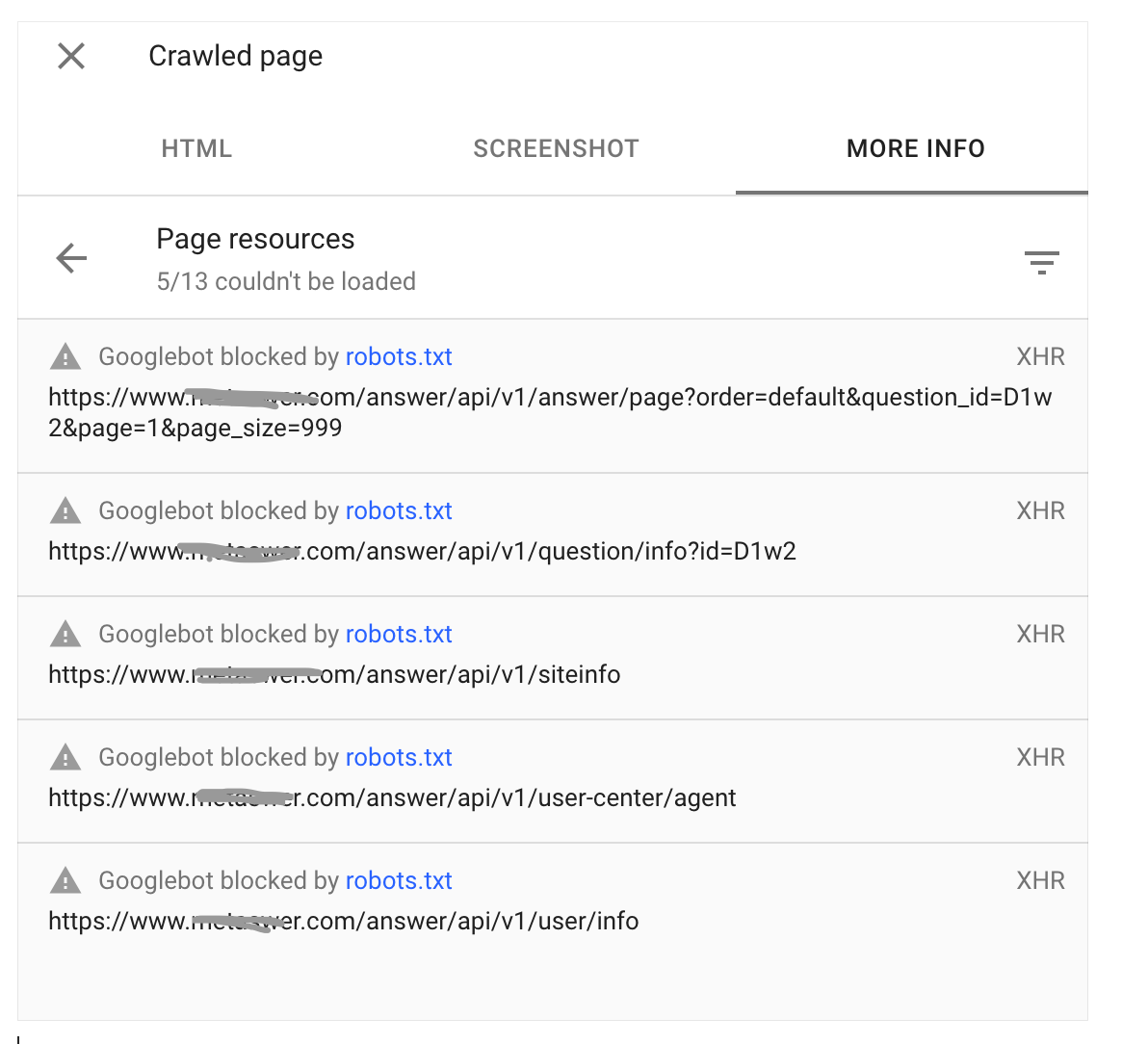

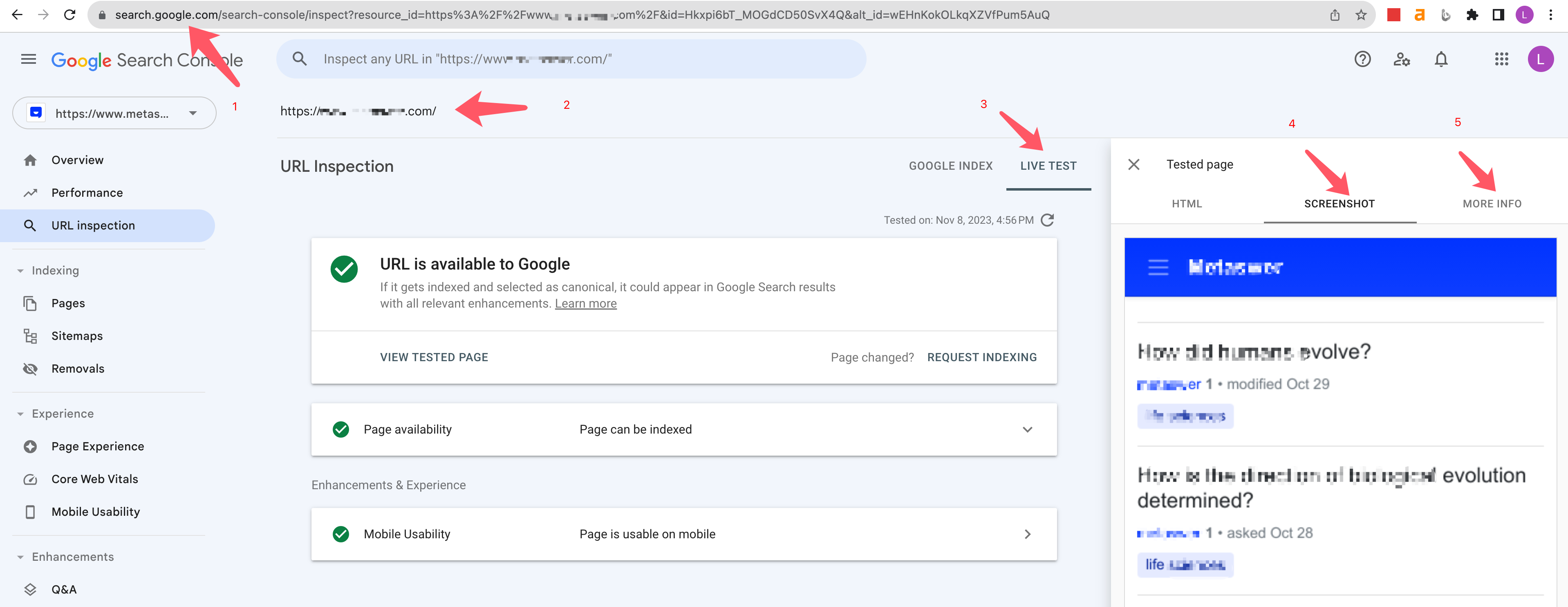

I used the crawler in Google Search Console. If the robots file blocks Google's crawler from fetching API-related links, no results appear in step 4 in the image below. In step 5, you get a notice that the API-related links are blocked by the robots file, so Google's crawler cannot fetch them.

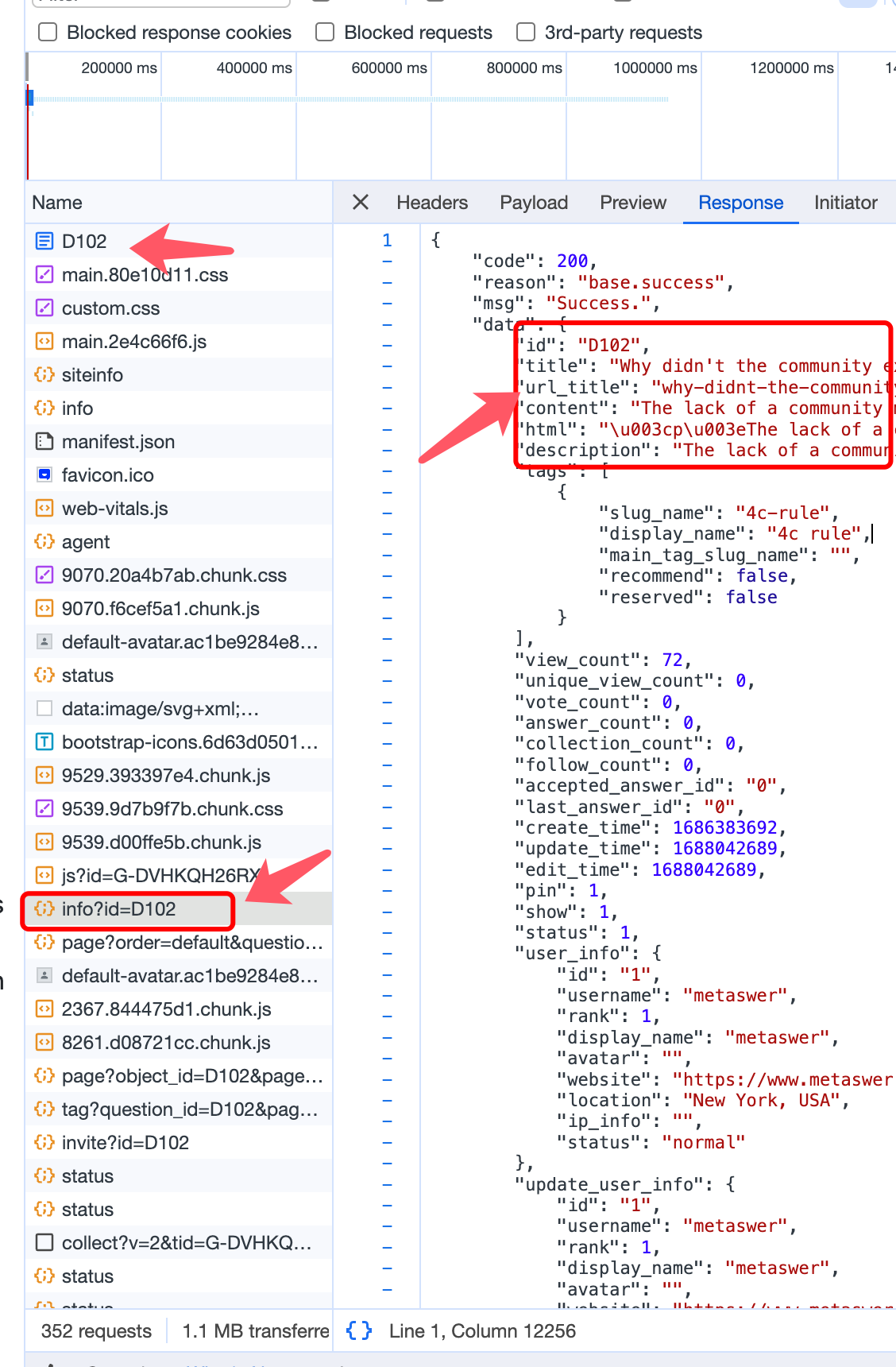
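To make the blocking behaviour described above easier to reproduce locally, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots rules, the `/answer/api/` prefix, the `example.com` URLs, and the helper name `is_crawlable` are all assumptions for illustration; they are not copied from the project's actual robots file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots rules: the "/answer/api/" prefix is an assumption,
# standing in for whatever API path the real robots file disallows.
ROBOTS_TXT = """\
User-agent: *
Disallow: /answer/api/
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A normal page URL is allowed, while an API URL under the disallowed prefix is not.
page_ok = is_crawlable(ROBOTS_TXT, "Googlebot", "https://example.com/questions/1")
api_ok = is_crawlable(
    ROBOTS_TXT, "Googlebot", "https://example.com/answer/api/v1/question/info"
)
print(page_ok, api_ok)
```

If a crawler renders the page with JavaScript and the page depends on a disallowed API URL, that request is the one a check like this reports as blocked.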

If API-related content were rendered on the backend, I should not see API-related links in the Chrome developer tools. But I can currently see these links.

The image below clearly shows the API-related links. Without these APIs to get content from the backend, the web page would have no content.

Googlebot is a crawler program used by the Google search engine, which is responsible for accessing and crawling web content and adding it to Google's index. Googlebot is automated and navigates from one webpage to another by following links while crawling and parsing the webpage's content.

Google Search Console (formerly Google Webmaster Tools) is a set of tools designed to help website owners monitor and optimize their performance in Google search results. It provides a range of features, including submitting and validating websites, viewing search analytics data, managing site maps, checking and troubleshooting errors, and more.

The two serve different purposes, so I would say your scenario has nothing to do with SSR.

In fact, our SSR is rendered with a backend template. That is, when Googlebot crawls a page, the server returns the entire document directly. No API requests are made at that point, which is why we disallowed API access in the robots file. From your description, it seems that you need to pass Google Ads certification. Does this certification require SSR?

@shuai If API-related content were rendered on the backend, I should not see API-related links in the Chrome developer tools. But I can currently see these links. If access to these API links is blocked, the page will not get the data, so it will show only the frame content.

You misunderstood what I meant. I mean that for SEO, the content crawled by Google is rendered through the backend template file. When the crawler requests a page, the complete content of the page is assembled directly on the server. As discussed in Discord, the page content does not need to be fetched through the API in that case.
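As a rough illustration of the idea in the reply above (the server assembles the complete page, so the crawler never needs the API), here is a minimal Python sketch. It is not the project's actual template code; the template, the `render_question_page` helper, and the sample question are hypothetical.

```python
from html import escape
from string import Template

# Hypothetical page template: the content is embedded in the HTML itself,
# so a crawler that does not run JavaScript still sees it.
PAGE = Template("""<!doctype html>
<html>
  <head><title>$title</title></head>
  <body>
    <h1>$title</h1>
    <article>$body</article>
  </body>
</html>""")


def render_question_page(question: dict) -> str:
    """Build the full HTML document on the server from already-loaded data."""
    return PAGE.substitute(
        title=escape(question["title"]),
        body=escape(question["body"]),
    )


html_doc = render_question_page(
    {"title": "How do I enable SSR?", "body": "The content is already in the HTML."}
)
print(html_doc)
```

Because the response already contains the title and body, a crawler receiving this document has nothing left to request from the API, which is why disallowing the API in the robots file does not hide the content in this setup.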